Overview

This article explains the terms specific to FineDataLink (FDL) to help you use the product.

Function Module

FDL provides function modules including Data Development, Data Pipeline, Data Service, Task O&M, and others to meet a series of needs such as data synchronization, processing, and cleaning.

| Name | Positioning | Function Description |
|---|---|---|
| Data Development | For timed data synchronization and data processing. | Develop and arrange tasks through SQL statements or visual operations. |
| Data Pipeline | For real-time data synchronization. | Synchronize data in real time with high performance in the case of large data volume or standard table structure. |
| Data Service | For cross-domain data transmission by releasing APIs. | Encapsulate the standardized data after processing as standard APIs and release them for external systems to call. |
| Task O&M | For the operation and maintenance of scheduled tasks, pipeline tasks, and data services. | Carry out unified management and operation monitoring of tasks and provide an overview of tasks. |

Data Development

Usually used with Task O&M, Data Development can define the development and scheduling attributes of periodic scheduled tasks. It provides a visual development page, helping you easily build offline data warehouses and ensuring efficient and stable data production.

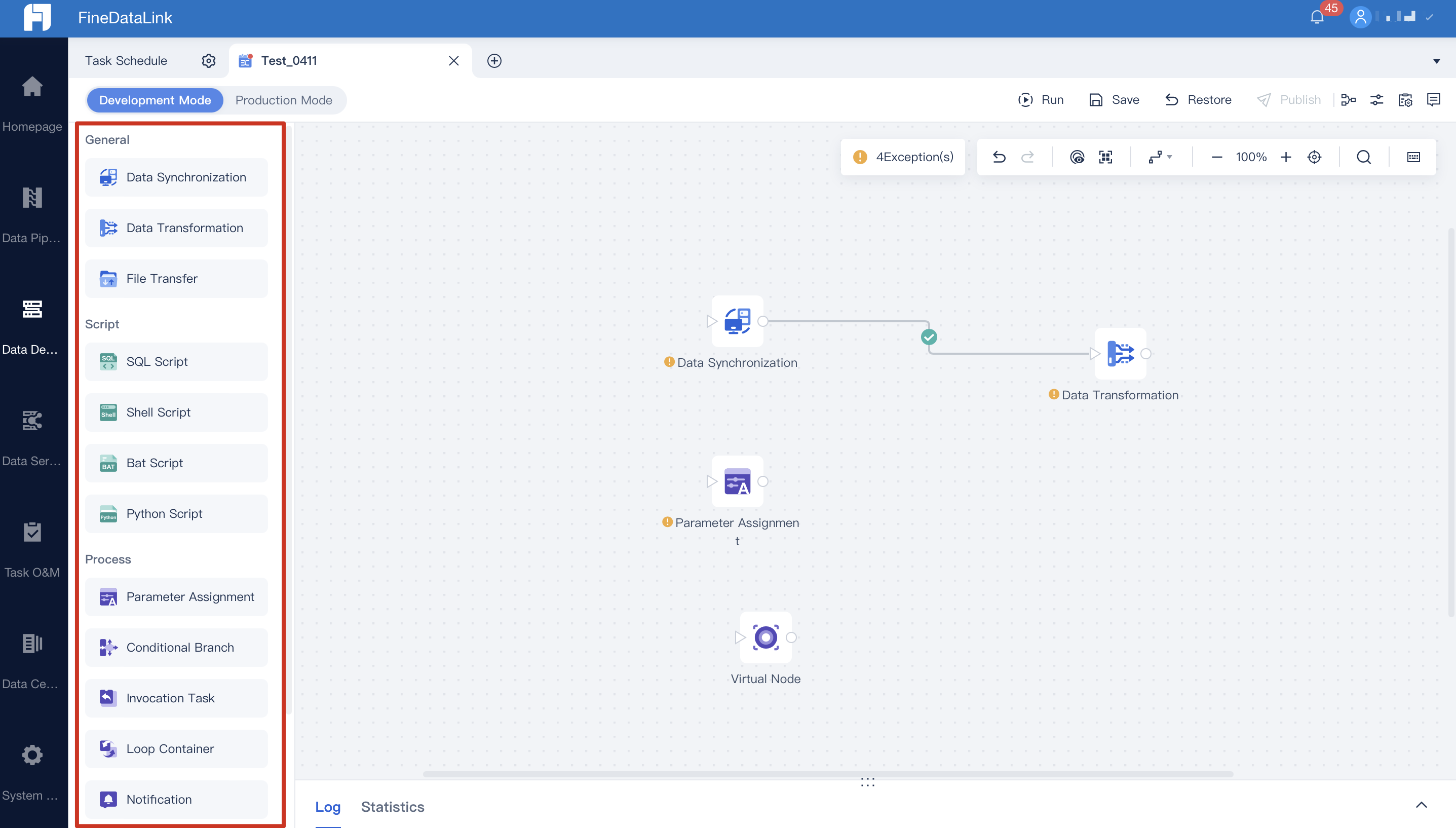

Data Development provides various nodes for you to choose from according to business needs, many of which support periodic task scheduling.

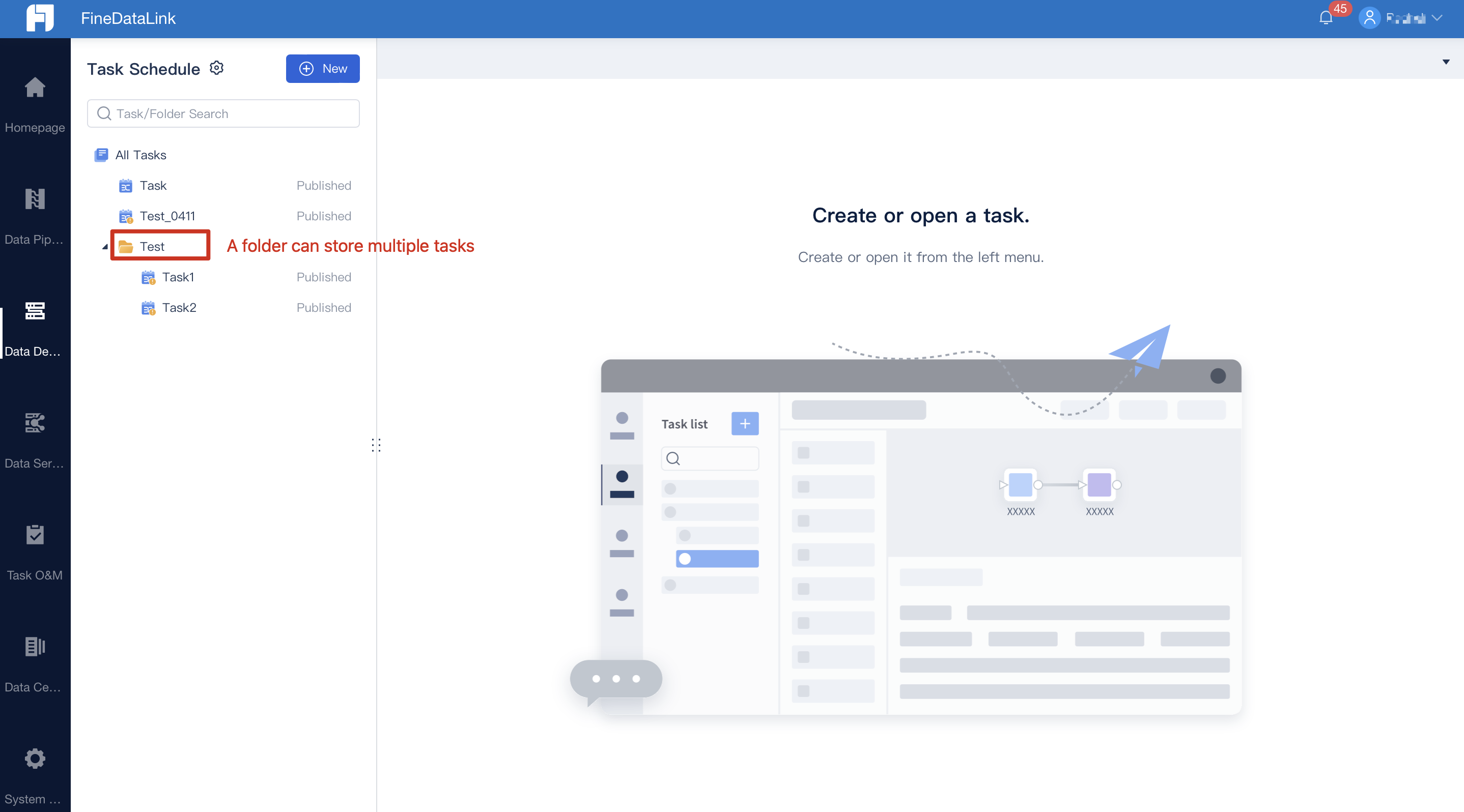

Folder

A folder is used to store data development tasks. One folder can store multiple scheduled tasks.

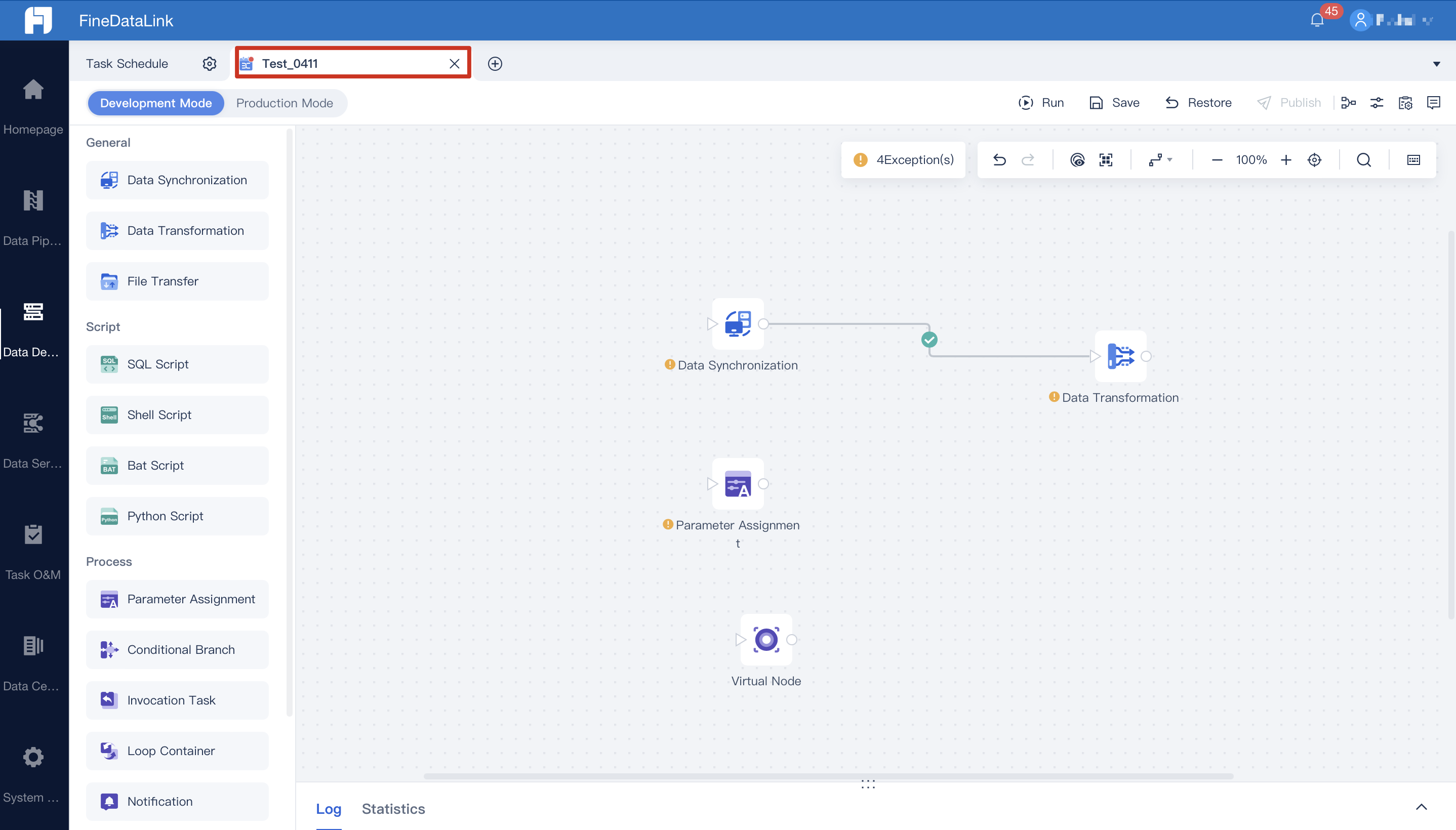

Scheduled Task

Scheduled Task defines the operations performed on data. For example:

Synchronize data from MySQL to Oracle through a task using the Data Synchronization node.

Parse API data and store them in a specified database through Data Transformation, Loop Container, and other nodes.

A scheduled task can consist of a single data node or process node, or be a combination of multiple nodes.

Node

A node is a basic unit of a scheduled task. You can determine the execution order of nodes by connecting them with lines or curves, thus forming a complete scheduled task. Nodes in a task run in turn according to the dependencies.

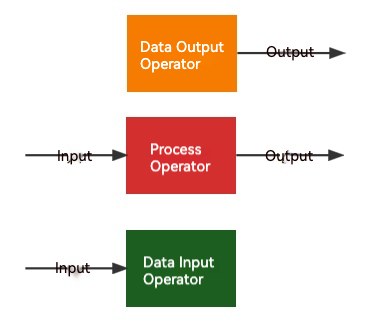

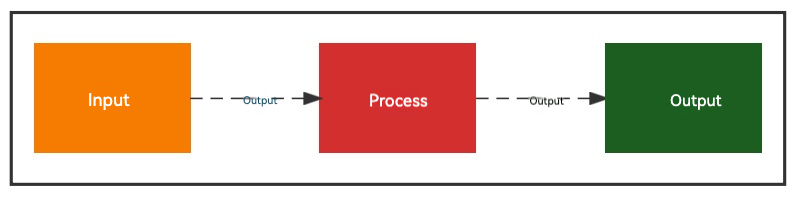
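The idea that nodes run in turn according to their dependencies can be sketched as a topological sort. This is a minimal illustration, not how FineDataLink schedules nodes internally. The node names and the `task_nodes` mapping are hypothetical, and the sketch ignores execution conditions on connections.

```python
from graphlib import TopologicalSorter

# Hypothetical task: each key is a node, each value is the set of upstream
# nodes connected to it (its dependencies).
task_nodes = {
    "Data Synchronization": set(),
    "SQL Script": {"Data Synchronization"},
    "Data Transformation": {"SQL Script"},
    "Notification": {"Data Transformation"},
}


def execution_order(nodes):
    """Return one valid run order in which every node follows its upstream nodes."""
    return list(TopologicalSorter(nodes).static_order())


order = execution_order(task_nodes)
print(order)
```

Because this example is a single chain, only one order is valid. With parallel branches, several orders would satisfy the same dependencies.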

Data Flow

ETL processing can be carried out in the Data Transformation node. With encapsulated visual functions, you can achieve data cleaning and loading efficiently.

| Positioning | Functional Boundary |
|---|---|
| It refers to the data flow between the input widgets and the output widgets, focusing on the processing of each row of records and each column of data. There are various operators for completing data input, output, transformation, and other operations. | A data flow (a data transformation node) only provides the following three types of operators, and does not contain combinatorial and process-type operators: |

Terms involved in the Data Transformation node are explained in the following table.

Classification | Function Point | Type | Positioning | Function Description | Initial Version |

|---|---|---|---|---|---|

| Data Input | DB Table Input | Basic | For reading the data of the database table. | / | 3.0 |

| API Input | Advanced Data Source Control - API Protocol (Timed) | For reading data through APIs. | / | 3.0 | |

| Dataset Input | Basic | For reading the data in the server dataset or self-service dataset. | / | 3.0 | |

| Jiandaoyun Input | Advanced Data Source Control - App Connector (Timed) | For backing up, calculating, analyzing, and displaying data from Jiandaoyun. | Obtain data from specified Jiandaoyun forms. | 4.0.2 | |

| MongoDB Input | Advanced Data Source Control - NoSQL | For accessing MongoDB and processing data. | / | 4.0.4.1 | |

| SAP RFC Input | Advanced Data Source Control - App Connector (Timed) | For calling the developed functions and extracting data of the SAP system through the RFC interface. | / | 4.0.10 | |

| File Input | Advanced Data Source Control - File System | For reading the data from a structured file data in a specified source and path. | Obtain data by reading structured files in specified sources and paths. | 4.0.14 | |

| Data Output | DB Table Output | Basic | For outputting data to the database table. | / | 3.0 |

| Comparison Deletion | / | For synchronizing the data reduction in the source table to the target table. | Delete the data rows that exist in the target table but have been deleted in the input source by comparing field values. It includes:

| 3.2 Note: This operator has been deleted since Version 4.0.18. | |

| Parameter Output | Basic | For outputting the obtained data as the parameter for use by downstream nodes. | Output the data as the parameter for use by downstream nodes in a task. | 4.0.13 | |

| API Output | Advanced Data Source Control - API Protocol (Timed) | For outputting data to APIs. | / | 4.0.19 | |

| Jiandaoyun Output | Advanced Data Source Control - App Connector (Timed) | For outputting data to the Jiandaoyun form. | Upload the data to the specified Jiandaoyun form. | 4.0.20 | |

| File Output | Advanced Data Source Control - File System | For outputting the data as a file. | Output data to structured files in specified destinations and paths. | 4.0.26 | |

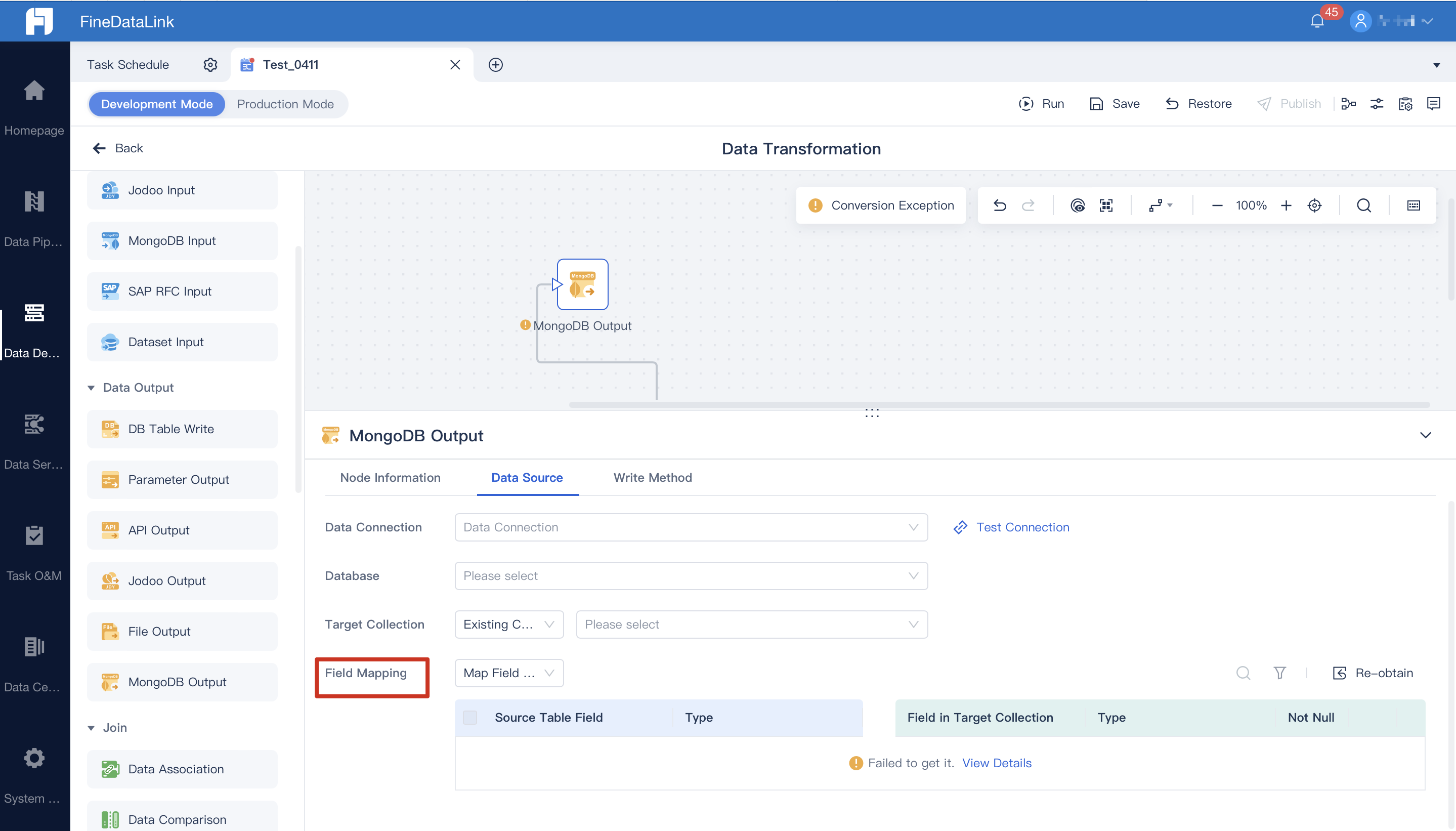

| MongoDB Output | Advanced Data Source Control - NoSQL | For outputting data to MongoDB. | Output data to MongoDB. | 4.1.6.4 | |

Join | Data Association | Advanced | For connecting multiple inputs and outputting the connected results. Support cross-database and cross-source connections. | The join methods include:

These join methods are consistent with how database tables are joined. You can get the join results by defining the association fields and conditions. It requires more than two inputs and only one output. | 3.1 |

Data Comparison | Advanced | For comparing two inputs and getting new, deleted, same, and different data. | Steps:

| 4.0.18 | |

Union All | Advanced | / | Merge multiple tables by rows, and output a combined table. | 4.1.0 | |

Transformation | Field Setting | Advanced | For adjusting the field name and type. | It provides the following functions:

| 3.7 |

Column to Row | Advanced | For changing the row and column structure of the data table to meet the needs of conversion between one-dimensional tables and two-dimensional tables. | Convert columns in the input data table to rows.

| 3.5 | |

Row to Column | Advanced | For converting the rows in the data table to columns. | Convert rows in the input data table to columns.

| 4.0.15 | |

JSON Parsing | Basic | For parsing JSON data and output data in the format of rows and columns. | Obtain JSON data output by the upstream node, parse them into row-and-column format data, and output parsed data to the downstream node. | 3.5 | |

XML Parsing | Advanced | For specifying the parsing strategy and parsing the input XML data into row-and-column format data. | Specify the parsing strategy and parse the input XML data into row-and-column format data. | 4.0.9 | |

JSON Generation | Advanced | For selecting fields to generate JSON objects. | Select fields and convert table data into multiple JSON objects, which can be nested. | 4.0.19 | |

New Calculation Column | Advanced | For generating new columns through calculation. | Support formula calculation or logical mapping of constants, parameters, and other fields, and place the results into a new column for subsequent operations or output. | 4.0.23 | |

Data Filtering | Advanced | For filtering eligible data records. | Filter eligible data records. | 4.0.19 | |

Group Summary | Advanced | For aggregation calculation according to specified dimensions. | It refers to merging the same data into one group based on conditions and summarizing and calculating data based on the grouped data. | 4.1.2 | |

| Field-to-Row Splitting | Advanced | For splitting field values according to specific rules (delimiters), where the split values form a new column. | 4.1.2 | ||

Field-to-Column Splitting | Advanced | For splitting field values according to specific rules (delimiters or the number of characters), where the split values form multiple new columns. | 4.1.2 | ||

Laboratory | Spark SQL | Advanced | For improving scenario coverage by providing flexible structured data processing. | With the built-in Spark calculation engine and Spark SQL operators, you can obtain the data output by the upstream node, query and process them using Spark SQL, and output them to the downstream node. | 3.6 It can be used as an input operator in V4.0.17. |

| Python | Advanced | / | For running Python scripts to process complex data. | 4.0.29 | |
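To make the Data Comparison entry in the table above more concrete, the following plain-Python sketch classifies rows from two inputs as new, deleted, same, or different. It is only an illustration of the concept, not the operator's implementation. The function name `compare_rows`, the key field `id`, and the convention that "new" means "present in the source but not in the target" are all assumptions.

```python
def compare_rows(source, target, key):
    """Classify rows into new, deleted, same, and different by a key field."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    result = {"new": [], "deleted": [], "same": [], "different": []}
    for k, row in src.items():
        if k not in tgt:
            result["new"].append(row)        # only in the source (assumed meaning of "new")
        elif row == tgt[k]:
            result["same"].append(row)       # identical in both inputs
        else:
            result["different"].append(row)  # same key, different field values
    for k, row in tgt.items():
        if k not in src:
            result["deleted"].append(row)    # only in the target
    return result


# Hypothetical sample inputs.
source = [{"id": 1, "name": "a"}, {"id": 2, "name": "b2"}, {"id": 3, "name": "c"}]
target = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 4, "name": "d"}]
diff = compare_rows(source, target, "id")
```

The "deleted" group corresponds to the rows that an operator such as Comparison Deletion would remove from the target table.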

Step Flow

A step flow is composed of nodes.

| Positioning | Functional Boundary |
|---|---|
| A step flow, also called workflow, is the arrangement of steps. Each step is relatively independent and runs in the specified order without the flow of data rows. | Each step is a closed loop from input to output. |

The terms involved in a step flow are explained in the following table.

Classification | Function Point | Type | Positioning | Function Description | Initial Version |

|---|---|---|---|---|---|

General | Data Synchronization | Basic | For completing data synchronization (between input and output) quickly. Data transformation is not supported during data synchronization. | Support multiple methods to fetch data, such as fetching data by API, SQL statement, and file. Memory calculation is not required as there is no data processing during the process. It applies to the following scenarios where:

| 1.0 |

Data Transformation | Advanced | For transforming and processing data between the input and output nodes. Note: The Data Transformation node can be a node in a step flow. | Complex data processing such as data association, transformation, and cleaning between table input and output nodes is supported based on data synchronization. Data Transformation is a data flow in essence but relies on the memory computing engine since it involves data processing. It is suitable for data development with small data volumes (ten million records and below), and the computing performance is related to memory configuration. | 3.0 | |

Script | SQL Script | Basic | For issuing SQL statements to the specified relational database and execution. | Write SQL statements to perform operations on tables and data such as creation, update, deletion, read, association, and summary. | 1.0 |

Shell Script | Advanced | For running the executable Shell script on a remote machine through the remote SSH connection. | Run the executable Shell script on a remote machine through a configured SSH connection. | 4.0.8 | |

Python Script | Advanced | For running Python scripts as an extension of data development tasks. For example, you can use Python programs to independently process data in files that cannot be read currently. | Configure the directory and input parameters of Python files, and run Python scripts through the SSH connection. | 4.0.28 | |

Bat Script | Advanced | For running the executable Batch script on a remote machine through the remote connection. | Run the executable batch script on a remote Windows machine through a configured remote connection. | 4.0.29 | |

Process | Conditional Branch | Basic | For conducting conditional judgment in a step flow. | Determine whether to continue to run downstream nodes based on a condition from upstream nodes or the system. | 2.0 |

Task Call | Basic | For calling other tasks to complete the cross-task arrangement. | Call any task and place it in the current task. | 3.2 | |

Virtual Node | Basic | Null operation. | A virtual node is a null operation and functions as a middle point to connect multiple upstream nodes and multiple downstream nodes. It can be used for process design. | 1.4 | |

Loop Container | Advanced | For the loop execution of multiple nodes. | Provide a loop container that supports For loops and While loops, allowing nodes in the container to run circularly. | 4.0.3.1 | |

Parameter Assignment | Basic | For outputting the obtained data as the parameter. | Output the read data as the parameter for use by downstream nodes. | 1.7 | |

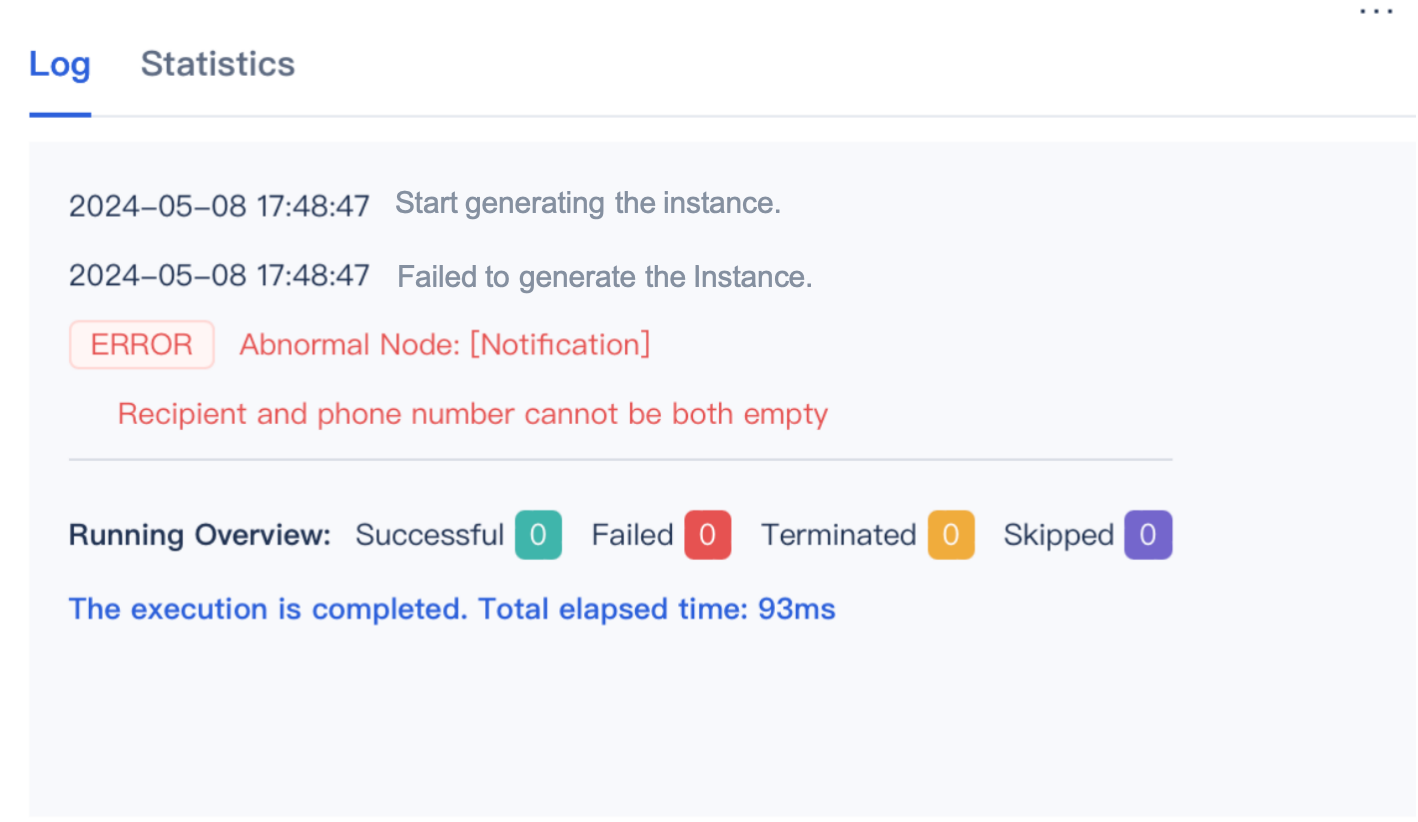

Notification | Notification | Basic | For customizing notification content and channels. | Notifications can be sent through email, SMS, platform, enterprise WeChat (group robot notification and App notification), and DingTalk. You can customize the notification content. | V3.6 V4.0.1 V4.0.3 |

Connection | Execution Judgment on Connection | Basic | For setting the execution logic of upstream and downstream nodes. | Right-click a connection line in a step flow and configure the execution condition, with options including Execute Unconditionally, Execute on Success, and Execute on Failure. Right-click a node in a process flow, and click Execution Judgment. You can customize the method for multiple execution conditions to take effect (All and Anyone) to control the dependencies of nodes in the task flexibly. | 4.0.3 |

Others | Remark | Basic | For adding notes to the task in the canvas. | You can customize the content and format. | 4.0.4 |
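The Execution Judgment on Connection entry above combines a per-connection condition with an All or Anyone rule on the downstream node. The sketch below shows one way such logic could be evaluated. It is an illustration under assumptions, not FineDataLink's implementation: the function `should_run` and its argument shapes are hypothetical, and only the option names come from the table.

```python
def should_run(upstream_results, conditions, mode="All"):
    """Decide whether a node runs, given the outcomes of its upstream nodes.

    upstream_results: {node_name: True if it succeeded, False if it failed}
    conditions: {node_name: "Execute Unconditionally" | "Execute on Success"
                 | "Execute on Failure"}, one entry per incoming connection
    mode: "All" requires every condition to hold; "Anyone" requires at least one.
    """
    def satisfied(node):
        condition = conditions[node]
        succeeded = upstream_results[node]
        if condition == "Execute Unconditionally":
            return True
        if condition == "Execute on Success":
            return succeeded
        if condition == "Execute on Failure":
            return not succeeded
        raise ValueError(f"unknown condition: {condition}")

    checks = [satisfied(node) for node in conditions]
    return all(checks) if mode == "All" else any(checks)


# Hypothetical example: node A succeeded and node B failed.
results = {"A": True, "B": False}
on_success = {"A": "Execute on Success", "B": "Execute on Success"}
run_all = should_run(results, on_success, mode="All")
run_any = should_run(results, on_success, mode="Anyone")
```

With these inputs, the All rule blocks the downstream node because B failed, while the Anyone rule lets it run because A succeeded.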

Field Mapping

Field Mapping allows you to view and modify the relationship of fields in the source table and the target table to set the data write rules for the target table.

Concurrency

Concurrency refers to the number of scheduled tasks and pipeline tasks running simultaneously in FineDataLink.

To ensure high performance for concurrent transmission, the number of CPU threads should be slightly greater than twice the number of concurrent tasks.
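As a rough worked example of this sizing rule, the sketch below computes the largest number of concurrent tasks for which the CPU thread count is still strictly greater than twice the concurrency. Reading "slightly greater than twice" as "strictly greater than twice" is an assumption, and the helper name `max_recommended_concurrency` is hypothetical.

```python
def max_recommended_concurrency(cpu_threads):
    """Largest task count n such that cpu_threads > 2 * n (never below 0)."""
    return max((cpu_threads - 1) // 2, 0)


# Hypothetical servers with 8 and 9 CPU threads.
print(max_recommended_concurrency(8))
print(max_recommended_concurrency(9))
```

Under this reading, an 8-thread server would be sized for 3 concurrent tasks and a 9-thread server for 4.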

Task Instance

Each time a scheduled task runs, an instance will be generated, which can be viewed in Running Record.

Start Time of Instance Generation

When a task is running, the log will record the time when the instance starts to be generated.

If you have set the execution frequency for a scheduled task, the instance generation may start slightly later than the set time. For example, if the task is set to run at 11:00:00 every day, the instance generation may start at 11:00:02.

Data Pipeline

Data Pipeline provides a real-time data synchronization function that allows you to perform table-level and database-level synchronization. Data changes in some or all tables in the source database can be synchronized to the target database in real time to keep data consistent.

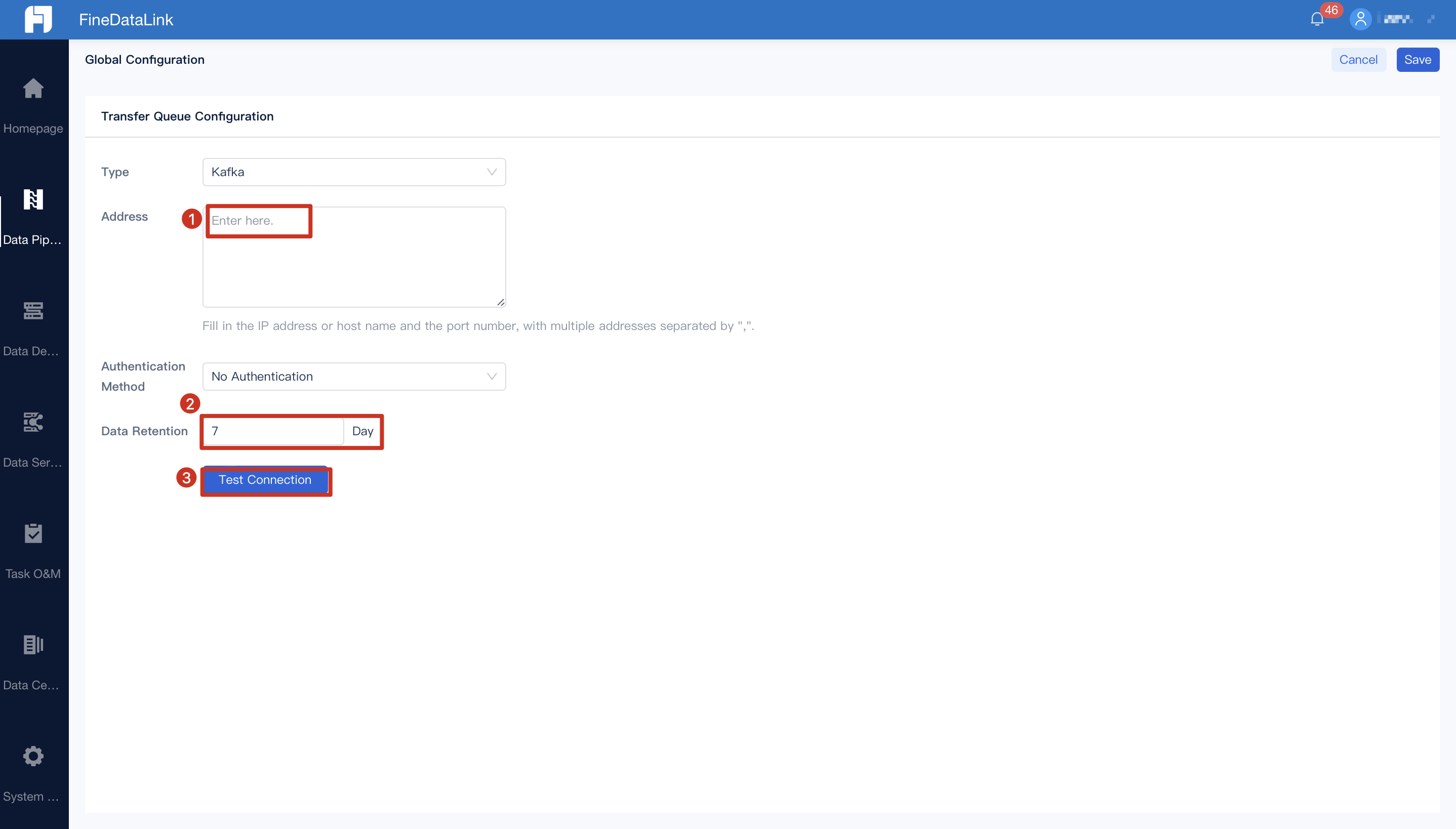

Transmission Queue

In the process of real-time synchronization, data from the source database are temporarily stored in Data Pipeline for efficiently writing data to the target database.

Therefore, it is necessary to configure a middleware for temporary data storage before setting up a data pipeline task. FineDataLink uses Kafka as a middleware for data synchronization to temporarily store and transmit data.

Dirty Data

Definition of Dirty Data in Scheduled Task

1. Data that cannot be written into the target database due to a mismatch between fields (such as length/type mismatch, missing field, and violation of non-empty rule).

2. Data with a conflicting primary key when Strategy for Primary Key Conflict in Write Method is set to "Record as Dirty Data If Same Primary Key Exists".

Definition of Dirty Data in Pipeline Task

Dirty data in pipeline tasks refer to data that cannot be written into the target database due to a mismatch between fields (such as length/type mismatch, missing field, and violation of non-empty rule).

Note: A primary key conflict in pipeline tasks does not produce dirty data, because the new data overwrite the old data.
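The field-mismatch cases listed above (length or type mismatch, missing field, violation of the non-empty rule) can be illustrated with a small validation sketch. This is not how FineDataLink detects dirty data. The `Column` class, the `dirty_reason` function, and the sample schema are hypothetical, and real checks depend on the target database.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Column:
    """Hypothetical description of one target-table column."""
    name: str
    py_type: type
    max_length: Optional[int] = None  # checked against the value's string length
    nullable: bool = True


def dirty_reason(row, schema):
    """Return why a row would be rejected, or None if it can be written."""
    for col in schema:
        if col.name not in row:
            return f"missing field: {col.name}"
        value = row[col.name]
        if value is None:
            if not col.nullable:
                return f"non-empty rule violated: {col.name}"
            continue
        if not isinstance(value, col.py_type):
            return f"type mismatch: {col.name}"
        if col.max_length is not None and len(str(value)) > col.max_length:
            return f"length mismatch: {col.name}"
    return None


schema = [Column("id", int, nullable=False), Column("name", str, max_length=5)]
rows = [
    {"id": 1, "name": "Alice"},
    {"id": None, "name": "Bob"},
    {"id": 3, "name": "Christopher"},
    {"id": "4", "name": "Dan"},
    {"name": "Eve"},
]
reasons = [dirty_reason(row, schema) for row in rows]
```

Only the first row passes. Each of the other rows trips one of the mismatch cases and would be counted as dirty data in this sketch.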

Data Service

Data Service enables the one-click release of processed data as APIs, facilitating cross-domain data transmission and sharing.

APPCode

APPCode is an API authentication method unique to FineDataLink and can be regarded as a long-term valid token. If set, it takes effect on a specified application API. To access the API, you need to specify the APPCode value in the Authorization request header in the format of APPCode + space + APPCode value.

For details, see Binding API to Application.
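The header format described for APPCode can be sketched with Python's standard library. The URL and the APPCode value below are placeholders, not real FineDataLink endpoints, and the helper name `build_request` is hypothetical. The request is only constructed here, not sent.

```python
import urllib.request


def build_request(url, appcode):
    """Build a request whose Authorization header is 'APPCode <value>'."""
    request = urllib.request.Request(url)
    # Format from the text: APPCode + space + APPCode value.
    request.add_header("Authorization", f"APPCode {appcode}")
    return request


# Placeholder URL and APPCode value, for illustration only.
req = build_request("https://fdl.example.com/service/publish/demo", "your-appcode-value")
print(req.get_header("Authorization"))
```

To call a released API, the same request could be passed to `urllib.request.urlopen`, with the HTTP method and body set as the API requires.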